How to Improve App Response Time in Makeup Check AI: Strategies for Lightning-Fast Beauty Tech

Discover proven techniques to improve app response time in makeup check AI, including code optimizations, model compression, and edge computing for a faster user experience.

Estimated reading time: 8 minutes

Key Takeaways

- Optimize code and caching to dramatically reduce load times.

- Compress and quantize AI models for faster on-device inference.

- Leverage edge computing to cut network hops and background threads to keep the UI responsive.

- Implement continuous monitoring and A/B testing for iterative improvement.

- Use client-side preprocessing to streamline real-time makeup analysis.

Table of Contents

- I. Introduction

- II. Importance of App Response Time

- III. Challenges

- IV. Strategies

- V. Integrating AI

- VI. Testing and Monitoring

- VII. Conclusion

I. Introduction: Improving App Response Time in Makeup Check AI

Users expect beauty apps to react in a flash. When they snap a selfie, they want instant makeup tips. That's why it's vital to improve app response time in makeup check AI.

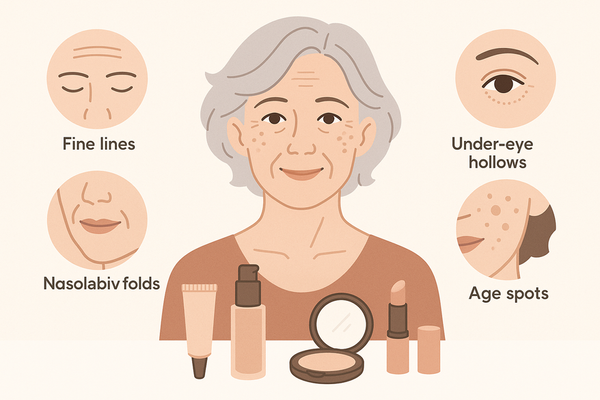

App response time is the duration from a user action—like uploading a selfie—to seeing results. Makeup check AI uses computer vision to check symmetry, coverage, and color, then suggests fixes in real time.

Fast feedback sets top beauty apps apart. Research shows that millisecond-level responses boost user trust and engagement. A Miquido study notes that real-time AI features can drive sales and retention, while an AFB review reports that users abandon tools that lag for more than one second.

For instance, leading apps such as Makeup Check AI optimize their image-processing pipelines and model deployments to maintain sub-second feedback loops.

For insights on seamless real-time adjustments in AI beauty tools, see real-time makeup adjustment techniques.

II. Importance of App Response Time in Makeup Check AI

Fast response time directly impacts both user experience and business outcomes.

User Experience & Retention

- Users expect sub-second feedback when applying virtual makeup.

- Delays over one second lead to frustration and drop-offs.

- Seamless interactions build confidence in AI-driven beauty advice.

Business Impact

- Every extra second of delay can cut conversions by about 7%.

- Real-time AR and AI tools raise average order values.

- Speedier apps see higher retention and more in-app purchases.

Makeup Check AI Context: Precise guidance on lipstick, eyeliner, or blush relies on instant analysis. Lag undermines the perceived value of real-time makeup tips.

III. Challenges in Improving App Response Time

Several technical and domain-specific factors can slow down your makeup check AI app.

Common Bottlenecks

- Server latency: network delays when calling cloud inference APIs.

- Inefficient code: unoptimized loops or blocking UI threads in image modules.

- Heavy resource usage: HD selfies (>2 MB) slow uploads and downloads.

AI-Specific Hurdles

- Model inference delays: deep CNNs may take 100+ ms per image on mid-range devices.

- Pre- and post-processing: face landmark detection and color histogram ops add 50–80 ms.

Makeup nuances: Lighting variance can trigger repeated scans, compounding latency. For feedback to feel instant, real-time overlay adjustments need to complete in under 200 ms.
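To see how quickly these costs consume the budget, here is a small Python sketch that sums per-stage timings against a 200 ms target. The stage names and the 150 ms network round trip are illustrative assumptions, not measurements; the other figures are the upper ends of the ranges listed above.

```python
from __future__ import annotations

# Target for feedback to feel instant, per the guideline above.
BUDGET_MS = 200


def remaining_budget(stage_ms: dict[str, float], budget_ms: float = BUDGET_MS) -> float:
    """Milliseconds left after all stages run; a negative value means over budget."""
    return budget_ms - sum(stage_ms.values())


# Illustrative timings (assumptions): worst-case pre/post-processing plus inference.
on_device = {"pre_post_processing": 80, "inference": 100}
# Same pipeline plus a hypothetical 150 ms round trip to a cloud inference API.
with_network = {**on_device, "network_round_trip": 150}

print(remaining_budget(on_device))     # 20
print(remaining_budget(with_network))  # -130
```

Even with the network removed, only about 20 ms remains for rendering, so every stage of the pipeline needs attention, not just the model.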

IV. Strategies to Improve App Response Time

This section covers three tactic groups: general optimizations, AI model tuning, and makeup check enhancements.

General Optimization Techniques

- Code Refactoring
  - Remove redundant image transforms.
  - Inline critical functions to cut overhead.

- Caching Strategies
  - Use an in-memory cache with LRU eviction (e.g., Redis configured with an LRU eviction policy) to store recent inference results.
  - Apply HTTP caching headers to static assets such as images and model files.

- Asynchronous Processing
  - Offload API calls and model inference to background threads.
  - Update the UI via callbacks or promises so it stays responsive.

- Load Balancing
  - Scale inference servers behind AWS Elastic Load Balancing (ELB) or a GKE load balancer to distribute traffic.
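The caching and asynchronous-processing items combine naturally. The Python sketch below, a minimal illustration rather than a production design, keeps an in-process LRU cache keyed by a SHA-256 hash of the image bytes and runs cache misses on a background thread pool, delivering results through a callback. `InferenceCache`, `run_model`, and `analyze_async` are hypothetical names; `run_model` stands in for your real model call, and a shared cache such as Redis would replace the in-process dictionary when several servers are involved.

```python
from __future__ import annotations

import hashlib
import threading
from collections import OrderedDict
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable


class InferenceCache:
    """Thread-safe LRU cache mapping an image hash to its analysis result."""

    def __init__(self, capacity: int = 128) -> None:
        self.capacity = capacity
        self._items: OrderedDict[str, dict] = OrderedDict()
        self._lock = threading.Lock()

    @staticmethod
    def key_for(image_bytes: bytes) -> str:
        return hashlib.sha256(image_bytes).hexdigest()

    def get(self, key: str) -> dict | None:
        with self._lock:
            if key not in self._items:
                return None
            self._items.move_to_end(key)  # mark as most recently used
            return self._items[key]

    def put(self, key: str, value: dict) -> None:
        with self._lock:
            self._items[key] = value
            self._items.move_to_end(key)
            if len(self._items) > self.capacity:
                self._items.popitem(last=False)  # evict the least recently used entry


def run_model(image_bytes: bytes) -> dict:
    """Hypothetical placeholder for the real makeup-check model call."""
    return {"coverage_score": len(image_bytes) % 100}


cache = InferenceCache(capacity=128)
executor = ThreadPoolExecutor(max_workers=2)


def analyze_async(image_bytes: bytes, on_result: Callable[[dict], None]) -> Future:
    """Serve cache hits immediately; run cache misses on a background thread."""
    key = InferenceCache.key_for(image_bytes)
    cached = cache.get(key)
    future: Future
    if cached is not None:
        future = Future()
        future.set_result(cached)  # already done, so the callback fires right away
    else:
        def work() -> dict:
            result = run_model(image_bytes)
            cache.put(key, result)
            return result

        future = executor.submit(work)
    # Runs on the worker thread, or on the caller's thread if the future is already done.
    future.add_done_callback(lambda f: on_result(f.result()))
    return future
```

On a mobile client, the callback would hand the result to the UI thread; the point is that the caller never blocks waiting for inference.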

Optimizing AI Model Performance

- Model Compression & Quantization
  - Prune low-importance weights.
  - Convert float32 weights to int8 for a 75% size reduction (4 bytes to 1 byte per weight), typically with an accuracy drop under 2%.

- Efficient Frameworks
  - Leverage TensorFlow Lite or Apple Core ML for on-device inference.

- On-Device Inference
  - Run models locally to avoid network hops.
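The arithmetic behind the 75% figure is simple: an int8 value takes 1 byte, a float32 takes 4. The Python sketch below shows magnitude pruning followed by symmetric per-tensor int8 quantization on a toy weight list. It is a simplified stand-in for what the TensorFlow Lite or Core ML converters do (they add calibration data and often per-channel scales), and the function names and weights are hypothetical.

```python
from __future__ import annotations

import array


def prune_by_magnitude(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest_first = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in smallest_first[:k]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: list[float]) -> tuple[array.array, float]:
    """Symmetric per-tensor quantization: w is approximated by q * scale, q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return array.array("b", q), scale  # "b" = signed 8-bit integers


def dequantize(q: array.array, scale: float) -> list[float]:
    return [v * scale for v in q]


weights = [0.8, -0.05, 0.3, -0.6, 0.01, 0.45, -0.2, 0.02]  # toy values
pruned = prune_by_magnitude(weights, sparsity=0.25)
q, scale = quantize_int8(pruned)

float32_bytes = len(pruned) * array.array("f").itemsize  # 4 bytes per float32
int8_bytes = len(q) * q.itemsize                          # 1 byte per int8
print(f"{float32_bytes} -> {int8_bytes} bytes ({1 - int8_bytes / float32_bytes:.0%} smaller)")
# 32 -> 8 bytes (75% smaller)
```

The rounding error per weight is bounded by half the scale, but the effect on makeup detection accuracy still has to be measured on a validation set of real selfies.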

Makeup Check App–Specific Enhancements

- Image Pre-Processing
  - Auto-crop face regions and resize them to 224×224 px.
  - Apply OpenCV denoising filters to standardize input.

- Task Division
  - Client side: detect facial landmarks and run basic symmetry checks.
  - Server side: perform style matching and product recommendations.

- Edge Computing
  - Deploy microservices at the CDN edge (Cloudflare Workers, AWS Lambda@Edge).
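The client-side half of this split can be sketched in a few lines of Python. `square_face_crop` expands a face bounding box (assumed to come from whatever face or landmark detector the app already uses) into a square crop with a margin, clamped to the image, ready to be resized to 224×224 px by the platform's image API. `mean_asymmetry` mirrors each right-side landmark across a vertical midline and measures how far it lands from its left-side partner. Both functions, the 25% margin, and the landmark pairing are illustrative assumptions, and the symmetry check assumes an upright face with no head tilt.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

MODEL_INPUT_PX = 224  # target model input size from the list above


@dataclass
class CropBox:
    x: int
    y: int
    side: int  # square side length in pixels


def square_face_crop(face_x: float, face_y: float, face_w: float, face_h: float,
                     img_w: int, img_h: int, margin: float = 0.25) -> CropBox:
    """Expand a face box into a square with extra margin, kept inside the image."""
    side = min(round(max(face_w, face_h) * (1 + margin)), img_w, img_h)
    center_x = face_x + face_w / 2
    center_y = face_y + face_h / 2
    # Center the square on the face, then shift it back inside the image bounds.
    x = max(0, min(round(center_x - side / 2), img_w - side))
    y = max(0, min(round(center_y - side / 2), img_h - side))
    return CropBox(x, y, side)


Point = tuple[float, float]


def mean_asymmetry(pairs: list[tuple[Point, Point]], midline_x: float) -> float:
    """Average distance (px) between each left landmark and its mirrored right partner."""
    if not pairs:
        return 0.0
    total = 0.0
    for (lx, ly), (rx, ry) in pairs:
        mirrored_rx = 2 * midline_x - rx  # reflect the right point across the midline
        total += math.hypot(lx - mirrored_rx, ly - ry)
    return total / len(pairs)


crop = square_face_crop(100, 50, 200, 240, img_w=640, img_h=480)
print(crop, MODEL_INPUT_PX / crop.side)  # crop box and the scale factor for the resize step
```

A large asymmetry value (for example, mismatched eyeliner wings) can be flagged instantly on the device, leaving only style matching and recommendations for the server.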

V. Integrating and Leveraging AI in Makeup Check AI

Using AI intelligently can boost both function and speed.

- AI-Driven Optimization
  - Predictive prefetching: load makeup templates in advance based on user history.
  - Adaptive resource allocation: switch between lightweight and full models.

- Balancing Complexity & Speed
  - Quick Scan Mode vs. Deep Analysis: run a fast basic check first, then follow up with detailed analysis.
  - Feature toggles: let users disable noncritical AI features.

- Best Practices
  - Modular architecture: update AI modules without shipping a full app release.
  - Continuous retraining informed by device telemetry, so models stay efficient as usage evolves.
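Adaptive resource allocation and predictive prefetching can both start as simple heuristics. The Python sketch below picks the full ("deep") model only on high-tier devices whose recent 95th-percentile latency leaves headroom under the 200 ms target, and prefetches the templates a user opens most often. The tier labels, the half-budget rule, and the template IDs are hypothetical; a production system might learn these policies from telemetry instead.

```python
from __future__ import annotations

from collections import Counter

LATENCY_BUDGET_MS = 200.0


def choose_model(device_tier: str, recent_p95_ms: float,
                 budget_ms: float = LATENCY_BUDGET_MS) -> str:
    """Return 'deep' only when the device is fast and recent latency leaves headroom."""
    if device_tier == "high" and recent_p95_ms < budget_ms / 2:
        return "deep"
    return "quick"  # lightweight model for the Quick Scan path


def templates_to_prefetch(history: list[str], k: int = 3) -> list[str]:
    """Return the k most frequently opened template IDs (ties keep first-seen order)."""
    return [template for template, _count in Counter(history).most_common(k)]


history = ["smoky-eye", "nude-lip", "smoky-eye", "bold-brow", "nude-lip", "smoky-eye"]
print(choose_model("high", recent_p95_ms=80))  # deep
print(templates_to_prefetch(history, k=2))     # ['smoky-eye', 'nude-lip']
```

Defaulting to the quick model keeps the first response fast on every device; the deep model becomes an upgrade rather than a requirement.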

Streamlining AI workflows is key; see the AI makeup app workflow guide for a deep dive.

VI. Testing and Monitoring App Response Time

Ongoing testing and data collection are essential for sustaining lightning-fast performance.

Continuous Performance Monitoring

- Track API latency, AR frame rates, and time to interactive (TTI) using Firebase Performance Monitoring, Xcode Instruments, or Android Profiler.
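Those tools report latency for you, but it helps to know what a percentile-based alert actually computes. The Python sketch below times a code path with `time.perf_counter`, stores samples in milliseconds, and raises an alert flag when the nearest-rank 95th percentile exceeds a threshold. `LatencyTracker` and its 200 ms default are illustrative assumptions; in production you would typically forward these timings to your monitoring tool rather than keep them in memory.

```python
from __future__ import annotations

import math
import time
from contextlib import contextmanager
from typing import Iterator


class LatencyTracker:
    """Collects latency samples and flags when p95 exceeds a threshold."""

    def __init__(self, alert_p95_ms: float = 200.0) -> None:
        self.alert_p95_ms = alert_p95_ms
        self.samples_ms: list[float] = []

    @contextmanager
    def measure(self) -> Iterator[None]:
        """Time the enclosed block and record the duration in milliseconds."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.samples_ms.append((time.perf_counter() - start) * 1000)

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile (p in 0..100) of the recorded samples."""
        if not self.samples_ms:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples_ms)
        rank = max(1, math.ceil(p * len(ordered) / 100))
        return ordered[rank - 1]

    def should_alert(self) -> bool:
        return bool(self.samples_ms) and self.percentile(95) > self.alert_p95_ms


tracker = LatencyTracker(alert_p95_ms=200.0)
with tracker.measure():
    time.sleep(0.01)  # stand-in for a hypothetical analyze_selfie() call
print(f"p95 = {tracker.percentile(95):.1f} ms, alert = {tracker.should_alert()}")
```

Alerting on p95 rather than the average catches the slow tail that users on older devices actually experience.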

Simulated Environments

- Network Throttling
  - Test on 3G, 4G, and 5G to mimic real-world conditions.

- Device Farms
  - Run on BrowserStack or Firebase Test Lab to cover diverse devices and OS versions.

Iterative Improvement

- A/B testing to compare flows with and without optimizations.

- Prioritize fixes that save over 100 ms on critical paths.
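The 100 ms rule can be applied directly to A/B latency samples. This Python sketch compares median latency between a control and a variant flow and flags the change as worth prioritizing only if the median saving exceeds 100 ms. The sample values are made up, and a real test should also check statistical significance before acting on the difference.

```python
from __future__ import annotations

from statistics import median

MIN_SAVING_MS = 100.0  # "save over 100 ms on critical paths"


def median_saving_ms(control_ms: list[float], variant_ms: list[float]) -> float:
    """Positive result means the variant is faster at the median."""
    return median(control_ms) - median(variant_ms)


def worth_prioritizing(control_ms: list[float], variant_ms: list[float],
                       threshold_ms: float = MIN_SAVING_MS) -> bool:
    return median_saving_ms(control_ms, variant_ms) > threshold_ms


# Hypothetical end-to-end latencies (ms) for the selfie-analysis flow.
control = [420, 380, 450, 400, 410]
variant = [290, 300, 310, 280, 305]
print(median_saving_ms(control, variant), worth_prioritizing(control, variant))  # 110 True
```

The median is used here because a few very slow sessions would otherwise dominate the comparison.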

VII. Conclusion & Actionable Next Steps

Recap of Core Strategies:

- Code refactoring

- Caching and asynchronous processing

- Model compression and on-device inference

- Edge computing and AI-driven prefetching

- Continuous monitoring and iteration

Action Items:

- Instrument your app today for latency tracking.

- Compress and quantize your AI models for mobile.

- Implement client-side preprocessing and edge inference.

- Set up continuous monitoring with alerts.

By balancing speed with sophisticated AI, you’ll delight users and drive business growth. Start applying these techniques now to position your beauty app as cutting-edge and lightning fast.

FAQ


How do I accurately measure app response time?

Use performance tools like Firebase Performance Monitoring, Xcode Instruments, or Android Profiler to track metrics such as API latency, TTI, and FPS.


Can on-device inference match cloud accuracy?

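Often it comes close. Models deployed with TensorFlow Lite or Core ML can approach cloud accuracy, especially when quantized carefully. Compare the quantized model against the full-precision version on the same test images, and balance precision with speed.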


What is the best way to handle large image uploads?

Crop to the face region and resize it to 224×224 px on the client, compress the result, apply denoising filters, and upload asynchronously to reduce perceived latency.


How often should I monitor performance?

Continuous monitoring with real-time alerts ensures you catch regressions. Analyze data weekly and run A/B tests monthly for iterative improvements.